Polylogarithm

In mathematics, the polylogarithm (also known as Jonquière's function, for Alfred Jonquière) is a special function $\operatorname{Li}_s(z)$ of order $s$ and argument $z$. Only for special values of $s$ does the polylogarithm reduce to an elementary function such as the natural logarithm or a rational function. In quantum statistics, the polylogarithm appears as the closed form of integrals of the Fermi–Dirac distribution and the Bose–Einstein distribution, and is also known as the Fermi–Dirac integral or the Bose–Einstein integral. In quantum electrodynamics, polylogarithms of positive integer order arise in the calculation of processes represented by higher-order Feynman diagrams.

The polylogarithm function is equivalent to the Hurwitz zeta function (either function can be expressed in terms of the other), and both functions are special cases of the Lerch transcendent. Polylogarithms should not be confused with polylogarithmic functions, nor with the offset logarithmic integral $\operatorname{Li}(x)$, which uses the same notation but takes only one variable.

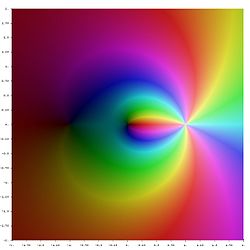

- Different polylogarithm functions in the complex plane

-

Li−3(z)

-

Li−2(z)

-

Li−1(z)

-

Li0(z)

-

Li1(z)

-

Li2(z)

-

Li3(z)

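The polylogarithm function is defined by a power series in $z$, which is also a Dirichlet series in $s$:

$$\operatorname{Li}_s(z) = \sum_{k=1}^{\infty}\frac{z^k}{k^s} = z + \frac{z^2}{2^s} + \frac{z^3}{3^s} + \cdots$$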

This definition is valid for arbitrary complex order $s$ and for all complex arguments $z$ with $|z| < 1$. It can be extended to $|z| \ge 1$ by analytic continuation. The special case $s = 1$ involves the ordinary natural logarithm, $\operatorname{Li}_1(z) = -\ln(1-z)$. The special cases $s = 2$ and $s = 3$ are called the dilogarithm (also referred to as Spence's function) and the trilogarithm, respectively. The name of the function comes from the fact that it may also be defined as the repeated integral of itself:

$$\operatorname{Li}_{s+1}(z) = \int_0^z \frac{\operatorname{Li}_s(t)}{t}\,dt$$

thus the dilogarithm is an integral of a function involving the logarithm, and so on. For nonpositive integer orders s, the polylogarithm is a rational function.
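
The following Python sketch sums the defining series directly, as a minimal illustration. It assumes $|z| < 1$ and a fixed truncation length, set by the hypothetical parameter `terms`. It performs no analytic continuation. As a sanity check, it compares the result with the special case $\operatorname{Li}_1(z) = -\ln(1-z)$.

```python
import math

# Hypothetical helper name, written for illustration only.


def polylog_series(s, z, terms=2000):
    """Truncated defining series Li_s(z) = sum_{k>=1} z**k / k**s.

    Assumes |z| < 1, where the series converges; no analytic continuation
    is attempted. `terms` is an arbitrary truncation length.
    """
    if abs(z) >= 1:
        raise ValueError("the defining series converges only for |z| < 1")
    total = 0
    power = 1
    for k in range(1, terms + 1):
        power *= z                # power == z**k
        total += power / k ** s
    return total


# Li_1(z) should agree with -ln(1 - z) inside the unit disk.
z = 0.6
print(polylog_series(1, z), -math.log(1 - z))
```

The terms decay like $|z|^k$, so convergence slows sharply as $|z|$ approaches 1. The integral and series representations discussed below are better suited there.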

Properties

When the polylogarithm order is an integer, it will be represented by $s = n$ (or $s = -n$ when negative). It is often convenient to define $\mu = \ln(z)$, where $\ln(z)$ is the principal branch of the complex logarithm, so that $-\pi < \operatorname{Im}(\mu) \le \pi$. All exponentiation will also be assumed to be single-valued:

$$z^s = \exp(s\ln z).$$

Depending on the order $s$, the polylogarithm may be multi-valued. The principal branch of $\operatorname{Li}_s(z)$ is taken to be given for $|z| < 1$ by the series definition above. It is taken to be continuous except on the positive real axis, where a cut is made from $z = 1$ to $\infty$ such that the axis is placed on the lower half plane of $z$. In terms of $\mu$, this amounts to $-\pi < \arg(-\mu) \le \pi$. The discontinuity of the polylogarithm as a function of $\mu$ can sometimes be confusing.

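For real argument $z$, the polylogarithm of real order $s$ is real if $z < 1$. For $z \ge 1$ its imaginary part is (Wood 1992, § 3):

$$\operatorname{Im}\left[\operatorname{Li}_s(z)\right] = -\frac{\pi\,\mu^{s-1}}{\Gamma(s)}.$$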

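Going across the cut, if $\varepsilon$ is an infinitesimally small positive real number, then:

$$\operatorname{Im}\left[\operatorname{Li}_s(z + i\varepsilon)\right] = \frac{\pi\,\mu^{s-1}}{\Gamma(s)}.$$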

Both results follow from the series expansion (see below) of $\operatorname{Li}_s(e^\mu)$ about $\mu = 0$.

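The derivatives of the polylogarithm follow from the defining power series:

$$z\,\frac{\partial \operatorname{Li}_s(z)}{\partial z} = \operatorname{Li}_{s-1}(z), \qquad \frac{\partial \operatorname{Li}_s(e^\mu)}{\partial \mu} = \operatorname{Li}_{s-1}(e^\mu).$$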

The square relationship follows from the series definition and is related to the duplication formula (see also Clunie (1954), Schrödinger (1952)):

$$\operatorname{Li}_s(-z) + \operatorname{Li}_s(z) = 2^{1-s}\operatorname{Li}_s(z^2).$$

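Kummer's function obeys a very similar duplication formula. The square relationship is a special case of the multiplication formula, which holds for any positive integer $p$:

$$\sum_{m=0}^{p-1}\operatorname{Li}_s\!\left(z\,e^{2\pi i m/p}\right) = p^{1-s}\operatorname{Li}_s(z^p),$$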

which can be proved using the series definition of the polylogarithm and the orthogonality of the exponential terms (see e.g. discrete Fourier transform).
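
The multiplication formula can be spot-checked numerically. This Python sketch truncates the defining series, so it assumes $|z| < 1$. It compares $\operatorname{Li}_s(z^p)$ with $p^{s-1}\sum_{m=0}^{p-1}\operatorname{Li}_s(z\,e^{2\pi i m/p})$; the case $p = 2$ is the square relationship. The function names are hypothetical.

```python
import cmath
import math

# Hypothetical helper names, written for illustration only.


def polylog_series(s, z, terms=400):
    # Truncated defining series; assumes |z| < 1 (no analytic continuation).
    total, power = 0j, 1 + 0j
    for k in range(1, terms + 1):
        power *= z
        total += power / k ** s
    return total


def multiplication_rhs(s, z, p):
    # p**(s-1) * sum_{m=0}^{p-1} Li_s(z * e^{2 pi i m / p}); should equal Li_s(z**p).
    return p ** (s - 1) * sum(
        polylog_series(s, z * cmath.exp(2j * math.pi * m / p)) for m in range(p)
    )


z = 0.3 + 0.4j  # |z| = 0.5, so z**p also lies inside the unit disk
for p in (2, 3, 5):
    print(p, abs(polylog_series(3, z ** p) - multiplication_rhs(3, z, p)))
```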

Another important property, the inversion formula, involves the Hurwitz zeta function or the Bernoulli polynomials and is found under relationship to other functions below.

Particular values

For particular cases, the polylogarithm may be expressed in terms of other functions (see below). Particular values for the polylogarithm may thus also be found as particular values of these other functions.

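1. For integer values of the polylogarithm order, the following explicit expressions are obtained by repeated application of $z\,\partial/\partial z$ to $\operatorname{Li}_1(z)$:

$$\begin{aligned}
\operatorname{Li}_{1}(z) &= -\ln(1-z)\\
\operatorname{Li}_{0}(z) &= \frac{z}{1-z}\\
\operatorname{Li}_{-1}(z) &= \frac{z}{(1-z)^2}\\
\operatorname{Li}_{-2}(z) &= \frac{z(1+z)}{(1-z)^3}\\
\operatorname{Li}_{-3}(z) &= \frac{z(1+4z+z^2)}{(1-z)^4}\\
\operatorname{Li}_{-4}(z) &= \frac{z(1+z)(1+10z+z^2)}{(1-z)^5}
\end{aligned}$$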

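Accordingly, for all nonpositive integer orders the polylogarithm reduces to a ratio of polynomials in $z$, and is therefore a rational function of $z$. The general case may be expressed as a finite sum:

$$\operatorname{Li}_{-n}(z) = \left(z\frac{\partial}{\partial z}\right)^{n}\frac{z}{1-z} = \sum_{k=0}^{n} k!\,S(n+1,k+1)\left(\frac{z}{1-z}\right)^{k+1}, \qquad n = 0, 1, 2, \ldots$$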

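where $S(n,k)$ are the Stirling numbers of the second kind. Equivalent formulae for negative integer orders are (Wood 1992, § 6):

$$\operatorname{Li}_{-n}(z) = (-1)^{n+1}\sum_{k=0}^{n} k!\,S(n+1,k+1)\left(\frac{-1}{1-z}\right)^{k+1}, \qquad n = 1, 2, 3, \ldots$$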

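and:

$$\operatorname{Li}_{-n}(z) = \frac{1}{(1-z)^{n+1}}\sum_{k=0}^{n-1}\left\langle {n \atop k} \right\rangle z^{n-k}, \qquad n = 1, 2, 3, \ldots$$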

where $\left\langle {n \atop k} \right\rangle$ are the Eulerian numbers. All roots of $\operatorname{Li}_{-n}(z)$ are distinct and real. They include $z = 0$, and the remaining roots are negative and centered about $z = -1$ on a logarithmic scale. As $n$ becomes large, numerical evaluation of these rational expressions increasingly suffers from cancellation (Wood 1992, § 6). Full accuracy can nevertheless be obtained by computing $\operatorname{Li}_{-n}(z)$ via the general relation with the Hurwitz zeta function (see below).
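
For nonpositive integer orders, the finite sums above can be evaluated exactly. The sketch below uses Python's `fractions.Fraction` for rational arguments. It implements both the Stirling-number form and the Eulerian-number form of $\operatorname{Li}_{-n}(z)$ and confirms that they agree. Exact rational arithmetic avoids the floating-point cancellation mentioned above, at some cost in speed. The helper names are hypothetical.

```python
from fractions import Fraction
from math import comb, factorial

# Hypothetical helper names, written for illustration only.


def stirling2(n, k):
    # Stirling number of the second kind S(n, k) via its explicit alternating sum.
    return sum((-1) ** j * comb(k, j) * (k - j) ** n for j in range(k + 1)) // factorial(k)


def eulerian(n, k):
    # Eulerian number <n, k> via its explicit alternating sum (valid for n >= 1).
    return sum((-1) ** j * comb(n + 1, j) * (k + 1 - j) ** n for j in range(k + 1))


def li_neg_stirling(n, z):
    # Li_{-n}(z) = sum_{k=0}^{n} k! S(n+1, k+1) (z / (1 - z))^(k+1),  n >= 0
    w = z / (1 - z)
    return sum(factorial(k) * stirling2(n + 1, k + 1) * w ** (k + 1) for k in range(n + 1))


def li_neg_eulerian(n, z):
    # Li_{-n}(z) = sum_{k=0}^{n-1} <n, k> z^(n-k) / (1 - z)^(n+1),  n >= 1
    return sum(eulerian(n, k) * z ** (n - k) for k in range(n)) / (1 - z) ** (n + 1)


z = Fraction(1, 3)
for n in range(1, 6):
    assert li_neg_stirling(n, z) == li_neg_eulerian(n, z)
print(li_neg_eulerian(3, z))  # 33/8, i.e. sum_k k^3 / 3^k
```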

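2. Some particular expressions for the argument $z = \tfrac12$ are:

$$\begin{aligned}
\operatorname{Li}_1(\tfrac12) &= \ln 2\\
\operatorname{Li}_2(\tfrac12) &= \tfrac{1}{12}\pi^2 - \tfrac12(\ln 2)^2\\
\operatorname{Li}_3(\tfrac12) &= \tfrac78\zeta(3) - \tfrac{1}{12}\pi^2\ln 2 + \tfrac16(\ln 2)^3
\end{aligned}$$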

where ζ is the Riemann zeta function. No formulae of this type are known for higher integer orders (Lewin 1991, p. 2), but one has for instance (Borwein, Borwein & Girgensohn 1995):

which involves the alternating double sum

In general one has for integer orders n ≥ 2 (Broadhurst 1996, p. 9):

where ζ(s1, ..., sk) is the multiple zeta function; for example:

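3. As a straightforward consequence of the series definition, the values of the polylogarithm at the $p$th complex roots of unity are given by the Fourier sum:

$$\operatorname{Li}_s\!\left(e^{2\pi i m/p}\right) = p^{-s}\sum_{k=1}^{p} e^{2\pi i m k/p}\,\zeta\!\left(s, \tfrac{k}{p}\right), \qquad m = 1, 2, \ldots, p-1,$$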

where $\zeta(s,a)$ is the Hurwitz zeta function. For $\operatorname{Re}(s) > 1$, where $\operatorname{Li}_s(1)$ is finite, the relation also holds with $m = 0$ or $m = p$. This formula is not as simple as the one implied by the more general relation with the Hurwitz zeta function, listed under relationship to other functions below. It has the advantage, however, of applying to non-negative integer values of $s$ as well. As usual, the relation may be inverted to express $\zeta(s, m/p)$ for any $m = 1, \ldots, p$ as a Fourier sum of $\operatorname{Li}_s(e^{2\pi i k/p})$ over $k = 1, \ldots, p$.
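
The Fourier sum can be tested for $\operatorname{Re}(s) > 1$, where both sides converge. In this sketch, the Hurwitz zeta function is approximated by a partial sum plus the leading Euler–Maclaurin tail terms, which is our own choice of method. $\operatorname{Li}_s(e^{i\theta})$ is computed by direct summation. The example uses $s = 3$ and $p = 4$, for which $\operatorname{Li}_3(i) = -\tfrac{3}{32}\zeta(3) + \tfrac{i}{32}\pi^3$. All function names are hypothetical.

```python
import cmath
import math

# Hypothetical helper names, written for illustration only.


def hurwitz_zeta(s, a, n_terms=1000):
    # Hurwitz zeta(s, a) for real s > 1, a > 0: partial sum plus the leading
    # Euler-Maclaurin tail terms (an illustrative choice of method).
    partial = sum((j + a) ** -s for j in range(n_terms))
    x = n_terms + a
    return partial + x ** (1 - s) / (s - 1) + 0.5 * x ** -s + s * x ** (-s - 1) / 12


def polylog_unit_circle(s, theta, n_terms=100_000):
    # Li_s(e^{i theta}) by direct summation; adequate only for real s > 1.
    return sum(cmath.exp(1j * theta * n) / n ** s for n in range(1, n_terms + 1))


def fourier_sum(s, m, p):
    # p^(-s) * sum_{k=1}^{p} e^{2 pi i m k / p} * zeta(s, k/p)
    return p ** -s * sum(
        cmath.exp(2j * math.pi * m * k / p) * hurwitz_zeta(s, k / p)
        for k in range(1, p + 1)
    )


s, p = 3, 4
for m in range(1, p):
    direct = polylog_unit_circle(s, 2 * math.pi * m / p)
    print(m, direct, fourier_sum(s, m, p))
```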

Relationship to other functions

- For $z = 1$ the polylogarithm reduces to the Riemann zeta function:

$$\operatorname{Li}_s(1) = \zeta(s), \qquad \operatorname{Re}(s) > 1.$$

- The polylogarithm is related to the Dirichlet eta function and the Dirichlet beta function:

$$\operatorname{Li}_s(-1) = -\eta(s),$$

- where $\eta(s)$ is the Dirichlet eta function. For pure imaginary arguments, we have:

$$\operatorname{Li}_s(\pm i) = -2^{-s}\eta(s) \pm i\,\beta(s),$$

- where β(s) is the Dirichlet beta function.

- The polylogarithm is related to the complete Fermi–Dirac integral as:

$$F_s(\mu) = -\operatorname{Li}_{s+1}(-e^\mu).$$

- The polylogarithm is a special case of the incomplete polylogarithm function:

$$\operatorname{Li}_s(z) = \operatorname{Li}_s(0, z).$$

- The polylogarithm is a special case of the Lerch transcendent (Erdélyi et al. 1981, § 1.11-14):

$$\operatorname{Li}_s(z) = z\,\Phi(z, s, 1).$$

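- The polylogarithm is related to the Hurwitz zeta function by:

$$\operatorname{Li}_s(z) = \frac{\Gamma(1-s)}{(2\pi)^{1-s}}\left[i^{1-s}\,\zeta\!\left(1-s, \frac12 + \frac{\ln(-z)}{2\pi i}\right) + i^{s-1}\,\zeta\!\left(1-s, \frac12 - \frac{\ln(-z)}{2\pi i}\right)\right],$$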

- This relation, however, is invalidated at positive integer $s$ by poles of the gamma function $\Gamma(1-s)$, and at $s = 0$ by a pole of both zeta functions. A derivation of this formula is given under series representations below. Using a functional equation for the Hurwitz zeta function, the polylogarithm is consequently also related to that function via (Jonquière 1889):

$$\operatorname{Li}_s\!\left(e^{2\pi i x}\right) + e^{i\pi s}\operatorname{Li}_s\!\left(e^{-2\pi i x}\right) = \frac{(2\pi)^s}{\Gamma(s)}\,e^{i\pi s/2}\,\zeta(1-s, x),$$

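- This relation holds for $0 \le \operatorname{Re}(x) < 1$ if $\operatorname{Im}(x) \ge 0$, and for $0 < \operatorname{Re}(x) \le 1$ if $\operatorname{Im}(x) < 0$. Equivalently, for all complex $s$ and for complex $z \notin\ ]0;1]$, the inversion formula reads

$$\operatorname{Li}_s(z) + e^{i\pi s}\operatorname{Li}_s(1/z) = \frac{(2\pi)^s}{\Gamma(s)}\,e^{i\pi s/2}\,\zeta\!\left(1-s, \frac12 + \frac{\ln(-z)}{2\pi i}\right),$$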

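- and for all complex $s$ and for complex $z \notin\ ]1;\infty[$

$$\operatorname{Li}_s(z) + e^{i\pi s}\operatorname{Li}_s(1/z) = \frac{(2\pi)^s}{\Gamma(s)}\,e^{i\pi s/2}\,\zeta\!\left(1-s, \frac12 - \frac{\ln(-1/z)}{2\pi i}\right).$$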

- For z ∉ ]0;∞[ one has ln(−z) = −ln(−1⁄z), and both expressions agree. These relations furnish the analytic continuation of the polylogarithm beyond the circle of convergence |z| = 1 of the defining power series. (The corresponding equation of Jonquière (1889, eq. 5) and Erdélyi et al. (1981, § 1.11-16) is not correct if one assumes that the principal branches of the polylogarithm and the logarithm are used simultaneously.) See the next item for a simplified formula when s is an integer.

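- For positive integer polylogarithm orders $s = n$, the Hurwitz zeta function $\zeta(1-n, x)$ reduces to Bernoulli polynomials, $\zeta(1-n, x) = -B_n(x)/n$. Jonquière's inversion formula for $n = 1, 2, 3, \ldots$ then becomes:

$$\operatorname{Li}_n\!\left(e^{2\pi i x}\right) + (-1)^n\operatorname{Li}_n\!\left(e^{-2\pi i x}\right) = -\frac{(2\pi i)^n}{n!}\,B_n(x),$$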

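- where again $0 \le \operatorname{Re}(x) < 1$ if $\operatorname{Im}(x) \ge 0$, and $0 < \operatorname{Re}(x) \le 1$ if $\operatorname{Im}(x) < 0$. When the polylogarithm argument is restricted to the unit circle, $\operatorname{Im}(x) = 0$, the left-hand side of this formula simplifies to $2\operatorname{Re}\left(\operatorname{Li}_n(e^{2\pi i x})\right)$ if $n$ is even, and to $2i\operatorname{Im}\left(\operatorname{Li}_n(e^{2\pi i x})\right)$ if $n$ is odd. For negative integer orders, on the other hand, the divergence of $\Gamma(s)$ implies for all $z$ that (Erdélyi et al. 1981, § 1.11-17):

$$\operatorname{Li}_{-n}(z) + (-1)^n\operatorname{Li}_{-n}(1/z) = 0, \qquad n = 1, 2, 3, \ldots$$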

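- More generally, one has for $n = 0, \pm1, \pm2, \pm3, \ldots$:

$$\operatorname{Li}_n(z) + (-1)^n\operatorname{Li}_n(1/z) = -\frac{(2\pi i)^n}{n!}\,B_n\!\left(\frac12 + \frac{\ln(-z)}{2\pi i}\right), \qquad z \notin\ ]0;1],$$

$$\operatorname{Li}_n(z) + (-1)^n\operatorname{Li}_n(1/z) = -\frac{(2\pi i)^n}{n!}\,B_n\!\left(\frac12 - \frac{\ln(-1/z)}{2\pi i}\right), \qquad z \notin\ ]1;\infty[,$$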

- where both expressions agree for z ∉ ]0;∞[. (The corresponding equation of Jonquière (1889, eq. 1) and Erdélyi et al. (1981, § 1.11-18) is again not correct.)

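- The polylogarithm with pure imaginary $\mu$ may be expressed in terms of the Clausen functions $\operatorname{Ci}_s(\theta)$ and $\operatorname{Si}_s(\theta)$, and vice versa (Lewin 1958, Ch. VII § 1.4; Abramowitz & Stegun 1972, § 27.8):

$$\operatorname{Li}_s\!\left(e^{\pm i\theta}\right) = \operatorname{Ci}_s(\theta) \pm i\,\operatorname{Si}_s(\theta).$$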

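- The inverse tangent integral $\operatorname{Ti}_s(z)$ (Lewin 1958, Ch. VII § 1.2) can be expressed in terms of polylogarithms:

$$\operatorname{Ti}_s(z) = \frac{1}{2i}\left[\operatorname{Li}_s(iz) - \operatorname{Li}_s(-iz)\right].$$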

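- The relation in particular implies:

$$\operatorname{Ti}_0(z) = \frac{z}{1+z^2}, \quad \operatorname{Ti}_1(z) = \arctan z, \quad \operatorname{Ti}_2(z) = \int_0^z \frac{\arctan t}{t}\,dt, \quad \ldots, \quad \operatorname{Ti}_{n+1}(z) = \int_0^z \frac{\operatorname{Ti}_n(t)}{t}\,dt,$$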

- which explains the function name.

- The Legendre chi function $\chi_s(z)$ (Lewin 1958, Ch. VII § 1.1; Boersma & Dempsey 1992) can be expressed in terms of polylogarithms:

$$\chi_s(z) = \tfrac12\left[\operatorname{Li}_s(z) - \operatorname{Li}_s(-z)\right].$$

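- The polylogarithm of non-negative integer order can be expressed as a generalized hypergeometric function with $n+1$ upper parameters equal to 1 and $n$ lower parameters equal to 2:

$$\operatorname{Li}_n(z) = z\;{}_{n+1}F_{n}(1, 1, \ldots, 1;\, 2, \ldots, 2;\, z), \qquad n = 0, 1, 2, \ldots$$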

- In terms of the incomplete zeta functions or "Debye functions" (Abramowitz & Stegun 1972, § 27.1):

$$Z_n(z) = \frac{1}{(n-1)!}\int_z^\infty \frac{t^{n-1}}{e^t - 1}\,dt,$$

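- the polylogarithm $\operatorname{Li}_n(z)$ for positive integer $n$ may be expressed as the finite sum (Wood 1992, § 16):

$$\operatorname{Li}_n(e^\mu) = \sum_{k=0}^{n-1} Z_{n-k}(-\mu)\,\frac{\mu^k}{k!}.$$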

- A remarkably similar expression relates the "Debye functions" $Z_n(z)$ to the polylogarithm:

$$Z_n(z) = \sum_{k=0}^{n-1} \operatorname{Li}_{n-k}(e^{-z})\,\frac{z^k}{k!}.$$
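
The Bernoulli-polynomial form of Jonquière's inversion formula is easy to check on the unit circle. For real $0 \le x < 1$ it reads $\operatorname{Li}_n(e^{2\pi i x}) + (-1)^n\operatorname{Li}_n(e^{-2\pi i x}) = -\frac{(2\pi i)^n}{n!}B_n(x)$. The following sketch assumes $n \ge 2$, so that direct summation converges. It builds $B_n(x)$ from Bernoulli numbers with the convention $B_1 = -\tfrac12$. It also uses the fact that, for real $x$, the two polylogarithm values are complex conjugates. The helper names are hypothetical.

```python
import cmath
import math
from fractions import Fraction
from math import comb

# Hypothetical helper names, written for illustration only.


def bernoulli_numbers(n_max):
    # B_0..B_{n_max} with B_1 = -1/2, from sum_{k=0}^{m} C(m+1, k) B_k = 0.
    b = [Fraction(1)] + [Fraction(0)] * n_max
    for m in range(1, n_max + 1):
        b[m] = -sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1)
    return b


def bernoulli_poly(n, x):
    # B_n(x) = sum_k C(n, k) B_k x^(n-k)
    b = bernoulli_numbers(n)
    return sum(comb(n, k) * float(b[k]) * x ** (n - k) for k in range(n + 1))


def li_on_circle(n, x, n_terms=50_000):
    # Li_n(e^{2 pi i x}) by direct summation; adequate only for n >= 2.
    return sum(cmath.exp(2j * math.pi * x * k) / k ** n for k in range(1, n_terms + 1))


def inversion_lhs(n, x):
    # For real x, Li_n(e^{-2 pi i x}) is the complex conjugate of Li_n(e^{2 pi i x}).
    value = li_on_circle(n, x)
    return value + (-1) ** n * value.conjugate()


def inversion_rhs(n, x):
    return -(2j * math.pi) ** n / math.factorial(n) * bernoulli_poly(n, x)


x = 0.3
for n in (2, 3, 4):
    print(n, inversion_lhs(n, x), inversion_rhs(n, x))
```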

Integral representations

Any of the following integral representations furnishes the analytic continuation of the polylogarithm beyond the circle of convergence |z| = 1 of the defining power series.

1. The polylogarithm can be expressed in terms of the integral of the Bose–Einstein distribution:

$$\operatorname{Li}_s(z) = \frac{1}{\Gamma(s)}\int_0^\infty \frac{t^{s-1}}{e^t/z - 1}\,dt.$$

This converges for $\operatorname{Re}(s) > 0$ and all $z$ except for $z$ real and $\ge 1$. The polylogarithm in this context is sometimes referred to as a Bose integral, but more commonly as a Bose–Einstein integral.[1] Similarly, the polylogarithm can be expressed in terms of the integral of the Fermi–Dirac distribution:

$$-\operatorname{Li}_s(-z) = \frac{1}{\Gamma(s)}\int_0^\infty \frac{t^{s-1}}{e^t/z + 1}\,dt.$$

This converges for Re(s) > 0 and all z except for z real and ≤ −1. The polylogarithm in this context is sometimes referred to as a Fermi integral or a Fermi–Dirac integral[2] (GSL 2010). These representations are readily verified by Taylor expansion of the integrand with respect to z and termwise integration. The papers of Dingle contain detailed investigations of both types of integrals.
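
Both integral representations can be evaluated with elementary quadrature. The Python sketch below uses the composite Simpson rule on a truncated interval $[0, 80]$, an arbitrary cut-off. It assumes integer $s \ge 1$, so the integrand is smooth at $t = 0$. Inside the unit disk it compares against the defining series. At $z = -3$ it compares against the dilogarithm inversion formula $\operatorname{Li}_2(z) + \operatorname{Li}_2(1/z) = -\tfrac16\pi^2 - \tfrac12\ln^2(-z)$, which illustrates that the integral continues the polylogarithm beyond $|z| = 1$. The function names are hypothetical.

```python
import math

# Hypothetical helper names, written for illustration only.


def simpson(f, a, b, n=20_000):
    # Composite Simpson rule with an even number n of panels.
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def bose_einstein(s, z, upper=80.0):
    # Li_s(z) = 1/Gamma(s) * int_0^inf t^(s-1) / (e^t / z - 1) dt, truncated at `upper`.
    return simpson(lambda t: t ** (s - 1) / (math.exp(t) / z - 1), 0.0, upper) / math.gamma(s)


def fermi_dirac(s, z, upper=80.0):
    # -Li_s(-z) = 1/Gamma(s) * int_0^inf t^(s-1) / (e^t / z + 1) dt, truncated at `upper`.
    return simpson(lambda t: t ** (s - 1) / (math.exp(t) / z + 1), 0.0, upper) / math.gamma(s)


def series(s, z, terms=400):
    # Defining series, for comparison inside the unit disk.
    return sum(z ** k / k ** s for k in range(1, terms + 1))


print(bose_einstein(2, 0.5), series(2, 0.5))
print(fermi_dirac(3, 0.5), -series(3, -0.5))
# Outside the unit disk: compare with the dilogarithm inversion formula at z = -3.
print(bose_einstein(2, -3.0), -math.pi ** 2 / 6 - 0.5 * math.log(3) ** 2 - series(2, -1 / 3))
```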

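The polylogarithm is also related to the integral of the Maxwell–Boltzmann distribution:

$$\lim_{z \to 0}\frac{\operatorname{Li}_s(z)}{z} = \frac{1}{\Gamma(s)}\int_0^\infty t^{s-1}e^{-t}\,dt = 1.$$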

This also gives the asymptotic behavior of the polylogarithm in the vicinity of the origin.

2. A complementary integral representation applies to Re(s) < 0 and to all z except to z real and ≥ 0:

This integral follows from the general relation of the polylogarithm with the Hurwitz zeta function (see above) and a familiar integral representation of the latter.

3. The polylogarithm may be quite generally represented by a Hankel contour integral (Whittaker & Watson 1927, § 12.22, § 13.13), which extends the Bose–Einstein representation to negative orders $s$. As long as the $t = \mu$ pole of the integrand does not lie on the non-negative real axis, and $s \ne 1, 2, 3, \ldots$, we have:

$$\operatorname{Li}_s(e^\mu) = -\frac{\Gamma(1-s)}{2\pi i}\oint_H \frac{(-t)^{s-1}}{e^{t-\mu} - 1}\,dt,$$

where H represents the Hankel contour. The integrand has a cut along the real axis from zero to infinity, with the axis belonging to the lower half plane of t. The integration starts at +∞ on the upper half plane (Im(t) > 0), circles the origin without enclosing any of the poles t = µ + 2kπi, and terminates at +∞ on the lower half plane (Im(t) < 0). For the case where µ is real and non-negative, we can simply subtract the contribution of the enclosed t = µ pole:

where R is the residue of the pole:

4. When the Abel–Plana formula is applied to the defining series of the polylogarithm, a Hermite-type integral representation results that is valid for all complex z and for all complex s:

where Γ is the upper incomplete gamma-function. All (but not part) of the ln(z) in this expression can be replaced by −ln(1⁄z). A related representation which also holds for all complex s,

avoids the use of the incomplete gamma function, but this integral fails for $z$ on the positive real axis if $\operatorname{Re}(s) \le 0$. This expression is found by writing $2^s\operatorname{Li}_s(-z)/(-z) = \Phi(z^2, s, \tfrac12) - z\,\Phi(z^2, s, 1)$, where $\Phi$ is the Lerch transcendent. The Abel–Plana formula is then applied to the first $\Phi$ series, and a complementary formula that involves $1/(e^{2\pi t} + 1)$ in place of $1/(e^{2\pi t} - 1)$ is applied to the second $\Phi$ series.

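5. As cited in [3], an integral for the polylogarithm can be obtained by integrating the ordinary geometric series termwise. For $s \in \mathbb{Z}^+$ this gives

$$\operatorname{Li}_{s+1}(z) = \frac{(-1)^s z}{s!}\int_0^1 \frac{\log^s(t)}{1 - zt}\,dt.$$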

Series representations

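1. As noted under integral representations above, the Bose–Einstein integral representation of the polylogarithm may be extended to negative orders $s$ by means of Hankel contour integration:

$$\operatorname{Li}_s(e^\mu) = -\frac{\Gamma(1-s)}{2\pi i}\oint_H \frac{(-t)^{s-1}}{e^{t-\mu} - 1}\,dt,$$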

where $H$ is the Hankel contour, $s \ne 1, 2, 3, \ldots$, and the $t = \mu$ pole of the integrand does not lie on the non-negative real axis. The contour can be modified so that it encloses the poles of the integrand at $t - \mu = 2k\pi i$. The integral can then be evaluated as the sum of the residues (Wood 1992, § 12, 13; Gradshteyn & Ryzhik 1980, § 9.553):

$$\operatorname{Li}_s(e^\mu) = \Gamma(1-s)\sum_{k=-\infty}^{\infty}(2k\pi i - \mu)^{s-1}.$$

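This holds for $\operatorname{Re}(s) < 0$ and all $\mu$ except where $e^\mu = 1$. For $0 < \operatorname{Im}(\mu) \le 2\pi$ the sum can be split as:

$$\operatorname{Li}_s(e^\mu) = \Gamma(1-s)\left[(-2\pi i)^{s-1}\sum_{k=0}^{\infty}\left(k + \frac{\mu}{2\pi i}\right)^{s-1} + (2\pi i)^{s-1}\sum_{k=0}^{\infty}\left(k + 1 - \frac{\mu}{2\pi i}\right)^{s-1}\right],$$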

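where the two series can now be identified with the Hurwitz zeta function:

$$\operatorname{Li}_s(e^\mu) = \frac{\Gamma(1-s)}{(2\pi)^{1-s}}\left[i^{1-s}\,\zeta\!\left(1-s, \frac{\mu}{2\pi i}\right) + i^{s-1}\,\zeta\!\left(1-s, 1 - \frac{\mu}{2\pi i}\right)\right].$$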

This relation, which has already been given under relationship to other functions above, holds for all complex s ≠ 0, 1, 2, 3, ... and was first derived in (Jonquière 1889, eq. 6).
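
The residue series converges for $\operatorname{Re}(s) < 0$ and can be summed numerically. This sketch truncates the series symmetrically at $|k| \le$ `k_max`, an arbitrary cut-off. It relies on Python's principal-branch complex power, and we assume this matches the cut of $(-t)^{s-1}$ described above at the test point $\mu = -1 + \tfrac12 i$. At that point $|e^\mu| < 1$, so the defining series is available for comparison. The function names are hypothetical.

```python
import cmath
import math

# Hypothetical helper names, written for illustration only.


def li_residue_series(s, mu, k_max=50_000):
    # Gamma(1-s) * sum_{|k| <= k_max} (2 k pi i - mu)^(s-1), intended for Re(s) < 0.
    # Python's complex ** uses the principal branch (assumed appropriate here).
    total = sum((2j * math.pi * k - mu) ** (s - 1) for k in range(-k_max, k_max + 1))
    return math.gamma(1 - s) * total


def li_series(s, z, terms=400):
    # Defining series for comparison; assumes |z| < 1.
    return sum(z ** k / k ** s for k in range(1, terms + 1))


s, mu = -1.5, complex(-1.0, 0.5)
print(li_residue_series(s, mu), li_series(s, cmath.exp(mu)))
```

Truncation error shrinks roughly like `k_max` to the power $\operatorname{Re}(s)$. The sum therefore converges slowly as $\operatorname{Re}(s)$ approaches 0 from below.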

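2. To represent the polylogarithm as a power series about $\mu = 0$, we write the series derived from the Hankel contour integral as:

$$\operatorname{Li}_s(e^\mu) = \Gamma(1-s)(-\mu)^{s-1} + \Gamma(1-s)\sum_{h=1}^{\infty}\left[(-2h\pi i - \mu)^{s-1} + (2h\pi i - \mu)^{s-1}\right].$$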

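When the binomial powers in the sum are expanded about $\mu = 0$ and the order of summation is reversed, the sum over $h$ can be expressed in closed form:

$$\operatorname{Li}_s(e^\mu) = \Gamma(1-s)(-\mu)^{s-1} + \sum_{k=0}^{\infty}\frac{\zeta(s-k)}{k!}\,\mu^k.$$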

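This result holds for $|\mu| < 2\pi$. Thanks to the analytic continuation provided by the zeta functions, it holds for all $s \ne 1, 2, 3, \ldots$. If the order is a positive integer, $s = n$, both the term with $k = n - 1$ and the gamma function become infinite, although their sum does not. One obtains (Wood 1992, § 9; Gradshteyn & Ryzhik 1980, § 9.554):

$$\lim_{s \to k+1}\left[\frac{\zeta(s-k)}{k!}\,\mu^k + \Gamma(1-s)(-\mu)^{s-1}\right] = \frac{\mu^k}{k!}\left[\sum_{h=1}^{k}\frac{1}{h} - \ln(-\mu)\right],$$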

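where the sum over $h$ vanishes if $k = 0$. So, for positive integer orders and for $|\mu| < 2\pi$, we have the series:

$$\operatorname{Li}_n(e^\mu) = \frac{\mu^{n-1}}{(n-1)!}\left[H_{n-1} - \ln(-\mu)\right] + \sum_{k=0,\,k \ne n-1}^{\infty}\frac{\zeta(n-k)}{k!}\,\mu^k,$$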

where $H_n$ denotes the $n$th harmonic number:

$$H_n = \sum_{h=1}^{n}\frac{1}{h}, \qquad H_0 = 0.$$

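The problem terms now contain $-\ln(-\mu)$, which tends to zero as $\mu \to 0$ when multiplied by $\mu^{n-1}$, except for $n = 1$. This reflects the fact that $\operatorname{Li}_s(z)$ exhibits a true logarithmic singularity at $s = 1$ and $z = 1$, since:

$$\lim_{\mu \to 0}\Gamma(1-s)(-\mu)^{s-1} = 0, \qquad \operatorname{Re}(s) > 1.$$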

For s close, but not equal, to a positive integer, the divergent terms in the expansion about µ = 0 can be expected to cause computational difficulties (Wood 1992, § 9). Erdélyi's corresponding expansion (Erdélyi et al. 1981, § 1.11-15) in powers of ln(z) is not correct if one assumes that the principal branches of the polylogarithm and the logarithm are used simultaneously, since ln(1⁄z) is not uniformly equal to −ln(z).

For nonpositive integer values of $s$, the zeta function $\zeta(s-k)$ in the expansion about $\mu = 0$ reduces to Bernoulli numbers: $\zeta(-n-k) = -B_{1+n+k}/(1+n+k)$. Numerical evaluation of $\operatorname{Li}_{-n}(z)$ by this series does not suffer from the cancellation effects that the finite rational expressions given under particular values above exhibit for large $n$.
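
The expansion about $\mu = 0$ can be compared with the rational closed forms. The sketch below assumes $0 < |\mu| < 2\pi$ and truncates after `k_max` terms. It computes $\zeta(-m) = (-1)^m B_{m+1}/(m+1)$ from Bernoulli numbers with the convention $B_1 = -\tfrac12$. This agrees with the form $-B_{1+m}/(1+m)$ used above, provided the latter is read with $B_1 = +\tfrac12$. The helper names are hypothetical.

```python
import math
from fractions import Fraction
from math import comb

# Hypothetical helper names, written for illustration only.


def bernoulli_numbers(n_max):
    # B_0..B_{n_max} with B_1 = -1/2, from sum_{k=0}^{m} C(m+1, k) B_k = 0.
    b = [Fraction(1)] + [Fraction(0)] * n_max
    for m in range(1, n_max + 1):
        b[m] = -sum(comb(m + 1, k) * b[k] for k in range(m)) / (m + 1)
    return b


def li_neg_mu_series(n, mu, k_max=60):
    # Li_{-n}(e^mu) = n! (-mu)^(-n-1) + sum_k zeta(-n-k) mu^k / k!,  0 < |mu| < 2 pi,
    # using zeta(-m) = (-1)^m B_{m+1} / (m+1) for m >= 0.
    b = bernoulli_numbers(n + k_max + 1)
    total = math.factorial(n) * (-mu) ** (-n - 1)
    for k in range(k_max + 1):
        m = n + k
        zeta_neg_m = (-1) ** m * b[m + 1] / (m + 1)
        total += float(zeta_neg_m) * mu ** k / math.factorial(k)
    return total


mu = 0.3
z = math.exp(mu)
print(li_neg_mu_series(2, mu), z * (1 + z) / (1 - z) ** 3)
```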

3. By use of the identity

$$\frac{1}{e^y - 1} = \frac12\left[\coth\frac{y}{2} - 1\right],$$

the Bose–Einstein integral representation of the polylogarithm (see above) may be cast in the form:

Replacing the hyperbolic cotangent with a bilateral series,

$$\coth\frac{t-\mu}{2} = \sum_{k=-\infty}^{\infty}\frac{2}{t - \mu + 2k\pi i},$$

then reversing the order of integral and sum, and finally identifying the summands with an integral representation of the upper incomplete gamma function, one obtains:

For both the bilateral series of this result and the series for the hyperbolic cotangent, symmetric partial sums from $-k_{\max}$ to $k_{\max}$ converge unconditionally as $k_{\max} \to \infty$. Provided the summation is performed symmetrically, this series for $\operatorname{Li}_s(z)$ thus holds for all complex $s$ as well as all complex $z$.

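4. Introducing an explicit expression for the Stirling numbers of the second kind into the finite sum for the polylogarithm of nonpositive integer order (see above), one may write:

$$\operatorname{Li}_{-n}(z) = \sum_{k=0}^{n}\left(\frac{-z}{1-z}\right)^{k+1}\sum_{j=0}^{k}(-1)^{j+1}\binom{k}{j}(j+1)^n, \qquad n = 0, 1, 2, \ldots$$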

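The infinite series obtained by simply extending the outer summation to $\infty$ (Guillera & Sondow 2008, Theorem 2.1):

$$\operatorname{Li}_s(z) = \sum_{k=0}^{\infty}\left(\frac{-z}{1-z}\right)^{k+1}\sum_{j=0}^{k}(-1)^{j+1}\binom{k}{j}(j+1)^{-s}$$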

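turns out to converge to the polylogarithm for all complex $s$ and for complex $z$ with $\operatorname{Re}(z) < \tfrac12$. For $|{-z}/(1-z)| < \tfrac12$ this can be verified by reversing the order of summation and using:

$$\sum_{k=j}^{\infty}\binom{k}{j}\left(\frac{-z}{1-z}\right)^{k+1} = \left[\left(\frac{-z}{1-z}\right)^{-1} - 1\right]^{-j-1} = (-z)^{j+1}.$$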

The inner coefficients of these series can be expressed by Stirling-number-related formulas involving the generalized harmonic numbers. For example, see generating function transformations for proofs (and references to proofs) of the following identities:

For the other arguments with Re(z) < 1⁄2 the result follows by analytic continuation. This procedure is equivalent to applying Euler's transformation to the series in z that defines the polylogarithm.
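
The Euler-transformed series can be tried outside the unit disk, for example at $z = -3$, where $-z/(1-z) = \tfrac34$. The inner alternating binomial sums cancel severely in floating point, so this sketch forms them exactly with `Fraction` before converting to `float`. It assumes integer $s \ge 1$ and real $z < \tfrac12$. The reference value for $\operatorname{Li}_2(-3)$ comes from the dilogarithm inversion formula $\operatorname{Li}_2(z) + \operatorname{Li}_2(1/z) = -\tfrac16\pi^2 - \tfrac12\ln^2(-z)$. The function name is hypothetical.

```python
import math
from fractions import Fraction
from math import comb

# Hypothetical helper name, written for illustration only.


def li_euler_transformed(s, z, k_max=120):
    # Li_s(z) = sum_k w^(k+1) * sum_{j=0}^{k} (-1)^(j+1) C(k, j) (j+1)^(-s),  w = -z/(1-z).
    # Assumes integer s >= 1 and real z < 1/2 (so |w| < 1); k_max is arbitrary.
    w = -z / (1 - z)
    if abs(w) >= 1:
        raise ValueError("the series needs Re(z) < 1/2")
    total = 0.0
    for k in range(k_max + 1):
        # Exact inner sum: the alternating binomial terms cancel heavily in floats.
        inner = sum(Fraction((-1) ** (j + 1) * comb(k, j), (j + 1) ** s) for j in range(k + 1))
        total += w ** (k + 1) * float(inner)
    return total


z = -3.0
reference = -math.pi ** 2 / 6 - 0.5 * math.log(-z) ** 2 - sum((1 / z) ** k / k ** 2 for k in range(1, 80))
print(li_euler_transformed(2, z), reference)
```

The convergence rate is set by $|z/(1-z)|$. Arguments with $\operatorname{Re}(z)$ close to $\tfrac12$ or with large $|z|$ therefore need a larger `k_max`, and the exact inner sums grow correspondingly more expensive.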

Asymptotic expansions

For |z| ≫ 1, the polylogarithm can be expanded into asymptotic series in terms of ln(−z):

where $B_{2k}$ are the Bernoulli numbers. Both versions hold for all $s$ and for any $\arg(z)$. As usual, the summation should be terminated when the terms start growing in magnitude. For negative integer $s$, the expansions vanish entirely; for non-negative integer $s$, they break off after a finite number of terms. Wood (1992, § 11) describes a method for obtaining these series from the Bose–Einstein integral representation (his equation 11.2 for $\operatorname{Li}_s(e^\mu)$ requires $-2\pi < \operatorname{Im}(\mu) \le 0$).

Limiting behavior

The following limits result from the various representations of the polylogarithm (Wood 1992, § 22):

Wood's first limit for Re(µ) → ∞ has been corrected in accordance with his equation 11.3. The limit for Re(s) → −∞ follows from the general relation of the polylogarithm with the Hurwitz zeta function (see above).

Dilogarithm

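The dilogarithm is the polylogarithm of order $s = 2$. An alternate integral expression of the dilogarithm for arbitrary complex argument $z$ is (Abramowitz & Stegun 1972, § 27.7):

$$\operatorname{Li}_2(z) = -\int_0^z \frac{\ln(1-u)}{u}\,du = -\int_0^1 \frac{\ln(1-zt)}{t}\,dt.$$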

A source of confusion is that some computer algebra systems define the dilogarithm as $\operatorname{dilog}(z) = \operatorname{Li}_2(1-z)$.

For real $z \ge 1$, the first integral expression for the dilogarithm can be written as

$$\operatorname{Li}_2(z) = \frac{\pi^2}{6} - \int_1^z \frac{\ln(t-1)}{t}\,dt - i\pi\ln z,$$

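from which, expanding $\ln(t-1)$ and integrating term by term, we obtain

$$\operatorname{Li}_2(z) = \frac{\pi^2}{3} - \frac12(\ln z)^2 - \sum_{k=1}^{\infty}\frac{1}{k^2 z^k} - i\pi\ln z.$$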
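
The expansion for real $z \ge 1$ is straightforward to evaluate once the sum is written as a series in $1/z$. The sketch below assumes the principal-branch convention used in this article: the cut is approached from below, so the imaginary part is $-\pi\ln z$. It checks the special value $\operatorname{Li}_2(2) = \tfrac14\pi^2 - i\pi\ln 2$. It also checks the derivative $-\ln(1-z)/z$, evaluated on the same branch as $-\left[\ln(z-1) + i\pi\right]/z$. The function name is hypothetical.

```python
import math

# Hypothetical helper name, written for illustration only.


def li2_real_ge1(z, terms=2000):
    # Li_2(z) for real z >= 1:  pi^2/3 - (ln z)^2 / 2 - sum_k 1/(k^2 z^k) - i pi ln z.
    # The sum converges like z^(-k), so it is slow for z close to 1.
    if z < 1:
        raise ValueError("this expression is stated for real z >= 1")
    inv = 1.0 / z
    tail = sum(inv ** k / (k * k) for k in range(1, terms + 1))
    log_z = math.log(z)
    return complex(math.pi ** 2 / 3 - 0.5 * log_z ** 2 - tail, -math.pi * log_z)


print(li2_real_ge1(2.0), complex(math.pi ** 2 / 4, -math.pi * math.log(2)))
```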

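The Abel identity for the dilogarithm is given by (Abel 1881)

$$\operatorname{Li}_2\!\left(\frac{x}{1-y}\right) + \operatorname{Li}_2\!\left(\frac{y}{1-x}\right) - \operatorname{Li}_2\!\left(\frac{xy}{(1-x)(1-y)}\right) = \operatorname{Li}_2(x) + \operatorname{Li}_2(y) + \ln(1-x)\ln(1-y).$$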

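This clearly holds for either $x = 0$ or $y = 0$. For general arguments it is then easily verified by differentiation with $\partial/\partial x\,\partial/\partial y$. For $y = 1 - x$ the identity reduces to Euler's reflection formula

$$\operatorname{Li}_2(x) + \operatorname{Li}_2(1-x) = \frac{\pi^2}{6} - \ln(x)\ln(1-x),$$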

where $\operatorname{Li}_2(1) = \zeta(2) = \tfrac16\pi^2$ has been used and $x$ may take any complex value.
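
Both identities can be spot-checked with the defining series whenever every dilogarithm argument lies inside the unit disk. This sketch reports the difference between the two sides of each identity, which should vanish up to rounding. The function names and the truncation length are hypothetical choices.

```python
import math

# Hypothetical helper names, written for illustration only.


def li2(x, terms=1000):
    # Defining series; assumes |x| < 1 (no analytic continuation).
    return sum(x ** k / k ** 2 for k in range(1, terms + 1))


def abel_defect(x, y):
    # LHS minus RHS of Abel's identity; every Li_2 argument must have modulus < 1.
    lhs = li2(x / (1 - y)) + li2(y / (1 - x)) - li2(x * y / ((1 - x) * (1 - y)))
    rhs = li2(x) + li2(y) + math.log(1 - x) * math.log(1 - y)
    return lhs - rhs


def reflection_defect(x):
    # Euler's reflection formula, checked for real 0 < x < 1.
    return li2(x) + li2(1 - x) - (math.pi ** 2 / 6 - math.log(x) * math.log(1 - x))


print(abel_defect(0.2, 0.3), reflection_defect(0.3))
```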

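In terms of the new variables $u = x/(1-y)$, $v = y/(1-x)$, the Abel identity reads

$$\operatorname{Li}_2(u) + \operatorname{Li}_2(v) - \operatorname{Li}_2(uv) = \operatorname{Li}_2\!\left(\frac{u - uv}{1 - uv}\right) + \operatorname{Li}_2\!\left(\frac{v - uv}{1 - uv}\right) + \ln\!\left(\frac{1-u}{1-uv}\right)\ln\!\left(\frac{1-v}{1-uv}\right),$$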

which corresponds to the pentagon identity given in (Rogers 1907).

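From the Abel identity for $x = y = 1 - z$ and the square relationship, we have Landen's identity

$$\operatorname{Li}_2(1-z) + \operatorname{Li}_2\!\left(1 - \frac1z\right) = -\frac12(\ln z)^2,$$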

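and applying the reflection formula to each dilogarithm, we find the inversion formula

$$\operatorname{Li}_2(z) + \operatorname{Li}_2(1/z) = -\frac{\pi^2}{6} - \frac12\left[\ln(-z)\right]^2,$$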

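and, for real $z \ge 1$, also

$$\operatorname{Li}_2(z) + \operatorname{Li}_2(1/z) = \frac{\pi^2}{3} - \frac12(\ln z)^2 - i\pi\ln z.$$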
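
Landen's identity, $\operatorname{Li}_2(1-z) + \operatorname{Li}_2(1 - 1/z) = -\tfrac12(\ln z)^2$, can be checked the same way for real $\tfrac12 < z < 2$. In that range both arguments lie in $(-1, \tfrac12)$. This is a minimal sketch; the function names and the truncation length are hypothetical choices.

```python
import math

# Hypothetical helper names, written for illustration only.


def li2(x, terms=2000):
    # Defining series; assumes |x| < 1 (no analytic continuation).
    return sum(x ** k / k ** 2 for k in range(1, terms + 1))


def landen_defect(z):
    # Li_2(1 - z) + Li_2(1 - 1/z) + (ln z)^2 / 2; should vanish for 1/2 < z < 2.
    return li2(1 - z) + li2(1 - 1 / z) + 0.5 * math.log(z) ** 2


for z in (0.6, 0.75, 1.5, 1.9):
    print(z, landen_defect(z))
```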

Known closed-form evaluations of the dilogarithm at special arguments are collected in the table below. Arguments in the first column are related by reflection x ↔ 1−x or inversion x ↔ 1⁄x to either x = 0 or x = −1; arguments in the third column are all interrelated by these operations.

Maximon (2003) discusses the 17th to 19th century references. The reflection formula was already published by Landen in 1760, prior to its appearance in a 1768 book by Euler (Maximon 2003, § 10); an equivalent to Abel's identity was already published by Spence in 1809, before Abel wrote his manuscript in 1826 (Zagier 1989, § 2). The designation bilogarithmische Function was introduced by Carl Johan Danielsson Hill (professor in Lund, Sweden) in 1828 (Maximon 2003, § 10). Don Zagier (1989) has remarked that the dilogarithm is the only mathematical function possessing a sense of humor.

- Here $\varphi = \tfrac12(1 + \sqrt5)$ denotes the golden ratio.

Polylogarithm ladders

Leonard Lewin discovered a remarkable and broad generalization of a number of classical relationships on the polylogarithm for special values. These are now called polylogarithm ladders. Define $\rho = \tfrac12(\sqrt5 - 1)$ as the reciprocal of the golden ratio. Then two simple examples of dilogarithm ladders are

given by Landen. Polylogarithm ladders occur naturally and deeply in K-theory and algebraic geometry. Polylogarithm ladders provide the basis for the rapid computations of various mathematical constants by means of the BBP algorithm (Bailey, Borwein & Plouffe 1997).
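
The following sketch numerically checks relations of this type for $\rho = \tfrac12(\sqrt5 - 1)$. The relations are supplied here for illustration rather than quoted from the sources above. They are the closed forms $\operatorname{Li}_2(\rho) = \tfrac{1}{10}\pi^2 - \ln^2\rho$ and $\operatorname{Li}_2(\rho^2) = \tfrac{1}{15}\pi^2 - \ln^2\rho$, and the six-term relation $\operatorname{Li}_2(\rho^6) = 4\operatorname{Li}_2(\rho^3) + 3\operatorname{Li}_2(\rho^2) - 6\operatorname{Li}_2(\rho) + \tfrac{7}{30}\pi^2$. All dilogarithms are evaluated by the truncated defining series.

```python
import math

# Hypothetical helper and variable names, written for illustration only.


def li2(x, terms=400):
    # Defining series; every argument used below lies in (0, 0.62).
    return sum(x ** k / k ** 2 for k in range(1, terms + 1))


rho = (math.sqrt(5) - 1) / 2  # reciprocal of the golden ratio
log_rho_sq = math.log(rho) ** 2

closed_rho = math.pi ** 2 / 10 - log_rho_sq   # claimed value of Li_2(rho)
closed_rho2 = math.pi ** 2 / 15 - log_rho_sq  # claimed value of Li_2(rho^2)
ladder_lhs = li2(rho ** 6)
ladder_rhs = 4 * li2(rho ** 3) + 3 * li2(rho ** 2) - 6 * li2(rho) + 7 * math.pi ** 2 / 30

print(li2(rho) - closed_rho, li2(rho ** 2) - closed_rho2, ladder_lhs - ladder_rhs)
```

Agreement to rounding error is evidence, not proof. Ladders of higher order are usually discovered and checked with high-precision integer-relation searches, not double-precision arithmetic.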

Monodromy

The polylogarithm has two branch points, one at $z = 1$ and another at $z = 0$. The second branch point, at $z = 0$, is not visible on the main sheet of the polylogarithm; it becomes visible only when the function is analytically continued to its other sheets. The monodromy group for the polylogarithm consists of the homotopy classes of loops that wind around the two branch points. Denoting these two loops by $m_0$ and $m_1$, the monodromy group has the group presentation

$$\left\langle m_0, m_1 \mid w = m_0 m_1 m_0^{-1} m_1^{-1},\ w m_1 = m_1 w \right\rangle.$$

For the special case of the dilogarithm, one also has $w m_0 = m_0 w$, and the monodromy group becomes the Heisenberg group (identifying $m_0$, $m_1$ and $w$ with $x$, $y$, $z$) (Vepstas 2008).

References

- Abel, N.H. (1881) [1826]. "Note sur la fonction " (PDF). In Sylow, L.; Lie, S. (eds.). Œuvres complètes de Niels Henrik Abel − Nouvelle édition, Tome II (in French). Christiania [Oslo]: Grøndahl & Søn. pp. 189–193. (This 1826 manuscript was only published posthumously.)

- Abramowitz, M.; Stegun, I.A. (1972). Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. New York: Dover Publications. ISBN 978-0-486-61272-0.

- Apostol, T.M. (2010), "Polylogarithm", in Olver, Frank W. J.; Lozier, Daniel M.; Boisvert, Ronald F.; Clark, Charles W. (eds.), NIST Handbook of Mathematical Functions, Cambridge University Press, ISBN 978-0-521-19225-5, MR 2723248.

- Bailey, D.H.; Borwein, P.B.; Plouffe, S. (April 1997). "On the Rapid Computation of Various Polylogarithmic Constants" (PDF). Mathematics of Computation. 66 (218): 903–913. Bibcode:1997MaCom..66..903B. doi:10.1090/S0025-5718-97-00856-9.

- Bailey, D.H.; Broadhurst, D.J. (June 20, 1999). "A Seventeenth-Order Polylogarithm Ladder". arXiv:math.CA/9906134.

- Berndt, B.C. (1994). Ramanujan's Notebooks, Part IV. New York: Springer-Verlag. pp. 323–326. ISBN 978-0-387-94109-7.

- Boersma, J.; Dempsey, J.P. (1992). "On the evaluation of Legendre's chi-function". Mathematics of Computation. 59 (199): 157–163. doi:10.2307/2152987. JSTOR 2152987.

- Borwein, D.; Borwein, J.M.; Girgensohn, R. (1995). "Explicit evaluation of Euler sums" (PDF). Proceedings of the Edinburgh Mathematical Society. Series 2. 38 (2): 277–294. doi:10.1017/S0013091500019088.

- Borwein, J.M.; Bradley, D.M.; Broadhurst, D.J.; Lisonek, P. (2001). "Special Values of Multiple Polylogarithms". Transactions of the American Mathematical Society. 353 (3): 907–941. arXiv:math/9910045. doi:10.1090/S0002-9947-00-02616-7.

- Broadhurst, D.J. (April 21, 1996). "On the enumeration of irreducible k-fold Euler sums and their roles in knot theory and field theory". arXiv:hep-th/9604128.

- Clunie, J. (1954). "On Bose-Einstein functions". Proceedings of the Physical Society. Series A. 67 (7): 632–636. Bibcode:1954PPSA...67..632C. doi:10.1088/0370-1298/67/7/308.

- Cohen, H.; Lewin, L.; Zagier, D. (1992). "A Sixteenth-Order Polylogarithm Ladder" (PS). Experimental Mathematics. 1 (1): 25–34.

- Coxeter, H.S.M. (1935). "The functions of Schläfli and Lobatschefsky". Quarterly Journal of Mathematics (Oxford). 6 (1): 13–29. Bibcode:1935QJMat...6...13C. doi:10.1093/qmath/os-6.1.13. JFM 61.0395.02.

- Cvijovic, D.; Klinowski, J. (1997). "Continued-fraction expansions for the Riemann zeta function and polylogarithms" (PDF). Proceedings of the American Mathematical Society. 125 (9): 2543–2550. doi:10.1090/S0002-9939-97-04102-6.

- Cvijovic, D. (2007). "New integral representations of the polylogarithm function". Proceedings of the Royal Society A. 463 (2080): 897–905. arXiv:0911.4452. Bibcode:2007RSPSA.463..897C. doi:10.1098/rspa.2006.1794.

- Erdélyi, A.; Magnus, W.; Oberhettinger, F.; Tricomi, F.G. (1981). Higher Transcendental Functions, Vol. 1 (PDF). Malabar, FL: R.E. Krieger Publishing. ISBN 978-0-89874-206-0. (this is a reprint of the McGraw–Hill original of 1953.)

- Fornberg, B.; Kölbig, K.S. (1975). "Complex zeros of the Jonquière or polylogarithm function". Mathematics of Computation. 29 (130): 582–599. doi:10.2307/2005579. JSTOR 2005579.

- GNU Scientific Library (2010). "Reference Manual". Retrieved 2010-06-13.

- Gradshteyn, Izrail Solomonovich; Ryzhik, Iosif Moiseevich; Geronimus, Yuri Veniaminovich; Tseytlin, Michail Yulyevich; Jeffrey, Alan (2015) [October 2014]. "9.553.". In Zwillinger, Daniel; Moll, Victor Hugo (eds.). Table of Integrals, Series, and Products. Translated by Scripta Technica, Inc. (8 ed.). Academic Press, Inc. p. 1050. ISBN 978-0-12-384933-5. LCCN 2014010276.

- Guillera, J.; Sondow, J. (2008). "Double integrals and infinite products for some classical constants via analytic continuations of Lerch's transcendent". The Ramanujan Journal. 16 (3): 247–270. arXiv:math.NT/0506319. doi:10.1007/s11139-007-9102-0.

- Hain, R.M. (March 25, 1992). "Classical polylogarithms". arXiv:alg-geom/9202022.

- Jahnke, E.; Emde, F. (1945). Tables of Functions with Formulae and Curves (4th ed.). New York: Dover Publications.

- Jonquière, A. (1889). "Note sur la série " (PDF). Bulletin de la Société Mathématique de France (in French). 17: 142–152. doi:10.24033/bsmf.392. JFM 21.0246.02.

- Kölbig, K.S.; Mignaco, J.A.; Remiddi, E. (1970). "On Nielsen's generalized polylogarithms and their numerical calculation". BIT. 10: 38–74. doi:10.1007/BF01940890.

- Kirillov, A.N. (1995). "Dilogarithm identities". Progress of Theoretical Physics Supplement. 118: 61–142. arXiv:hep-th/9408113. Bibcode:1995PThPS.118...61K. doi:10.1143/PTPS.118.61.

- Lewin, L. (1958). Dilogarithms and Associated Functions. London: Macdonald. MR 0105524.

- Lewin, L. (1981). Polylogarithms and Associated Functions. New York: North-Holland. ISBN 978-0-444-00550-2.

- Lewin, L., ed. (1991). Structural Properties of Polylogarithms. Mathematical Surveys and Monographs. 37. Providence, RI: Amer. Math. Soc. ISBN 978-0-8218-1634-9.

- Markman, B. (1965). "The Riemann Zeta Function". BIT. 5: 138–141.

- Maximon, L.C. (2003). "The Dilogarithm Function for Complex Argument". Proceedings of the Royal Society A. 459 (2039): 2807–2819. Bibcode:2003RSPSA.459.2807M. doi:10.1098/rspa.2003.1156.

- McDougall, J.; Stoner, E.C. (1938). "The computation of Fermi-Dirac functions". Philosophical Transactions of the Royal Society A. 237 (773): 67–104. Bibcode:1938RSPTA.237...67M. doi:10.1098/rsta.1938.0004. JFM 64.1500.04.

- Nielsen, N. (1909). "Der Eulersche Dilogarithmus und seine Verallgemeinerungen. Eine Monographie". Nova Acta Leopoldina (in German). Halle – Leipzig, Germany: Kaiserlich-Leopoldinisch-Carolinische Deutsche Akademie der Naturforscher. XC (3): 121–212. JFM 40.0478.01.

- Prudnikov, A.P.; Marichev, O.I.; Brychkov, Yu.A. (1990). Integrals and Series, Vol. 3: More Special Functions. Newark, NJ: Gordon and Breach. ISBN 978-2-88124-682-1. (See § 1.2, "The generalized zeta function, Bernoulli polynomials, Euler polynomials, and polylogarithms", p. 23.)

- Robinson, J.E. (1951). "Note on the Bose-Einstein integral functions". Physical Review. Series 2. 83 (3): 678–679. Bibcode:1951PhRv...83..678R. doi:10.1103/PhysRev.83.678.

- Rogers, L.J. (1907). "On function sum theorems connected with the series ". Proceedings of the London Mathematical Society (2). 4 (1): 169–189. doi:10.1112/plms/s2-4.1.169. JFM 37.0428.03.

- Schrödinger, E. (1952). Statistical Thermodynamics (2nd ed.). Cambridge, UK: Cambridge University Press.

- Truesdell, C. (1945). "On a function which occurs in the theory of the structure of polymers". Annals of Mathematics. Second Series. 46 (1): 144–157. doi:10.2307/1969153. JSTOR 1969153.

- Vepstas, L. (2008). "An efficient algorithm for accelerating the convergence of oscillatory series, useful for computing the polylogarithm and Hurwitz zeta functions". Numerical Algorithms. 47 (3): 211–252. arXiv:math.CA/0702243. Bibcode:2008NuAlg..47..211V. doi:10.1007/s11075-007-9153-8.

- Whittaker, E.T.; Watson, G.N. (1927). A Course of Modern Analysis (4th ed.). Cambridge, UK: Cambridge University Press. (This edition has been reprinted many times; a 1996 paperback has ISBN 0-521-09189-6.)

- Wirtinger, W. (1905). "Über eine besondere Dirichletsche Reihe". Journal für die Reine und Angewandte Mathematik (in German). 1905 (129): 214–219. doi:10.1515/crll.1905.129.214. JFM 37.0434.01.

- Wood, D.C. (June 1992). "The Computation of Polylogarithms. Technical Report 15-92*" (PS). Canterbury, UK: University of Kent Computing Laboratory. Retrieved 2005-11-01.

- Zagier, D. (1989). "The dilogarithm function in geometry and number theory". Number Theory and Related Topics: papers presented at the Ramanujan Colloquium, Bombay, 1988. Studies in Mathematics. 12. Bombay: Tata Institute of Fundamental Research and Oxford University Press. pp. 231–249. ISBN 0-19-562367-3. (Also appeared as "The remarkable dilogarithm" in Journal of Mathematical and Physical Sciences 22 (1988), pp. 131–145, and as Chapter I of (Zagier 2007).)

- Zagier, D. (2007). "The Dilogarithm Function" (PDF). In Cartier, P.E.; et al. (eds.). Frontiers in Number Theory, Physics, and Geometry II – On Conformal Field Theories, Discrete Groups and Renormalization. Berlin: Springer-Verlag. pp. 3–65. ISBN 978-3-540-30307-7.

External links

- Weisstein, Eric W. "Polylogarithm". MathWorld.

- Weisstein, Eric W. "Dilogarithm". MathWorld.

- Algorithms in Analytic Number Theory provides an arbitrary-precision, GMP-based, GPL-licensed implementation.
