Do the Math: Make Mathematics in Wikipedia Computable

This wiki supports an anonymous submission to ACM SIGIR 2021[1]. Since the SIGIR conference uses a double-blind review system, the identity of the authors is hidden. For legal inquiries, use the methods described at https://wikitech.wikimedia.org/.

In the following, we demonstrate the capabilities of our system based on a subset of articles copied from the English version of Wikipedia. The full list of all demo pages can be viewed here: Special:AllPages.

Sincerely, the anonymous authors.

Explore the Demo

Click on a Formula

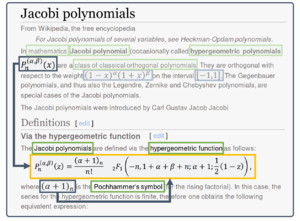

You can go to any of our demo pages and click on a formula. This leads to a special page that shows you the information and translations for the formula you clicked. As a good starting point, you can go to our use case example about Jacobi polynomials and click on the definition of the Jacobi polynomials.

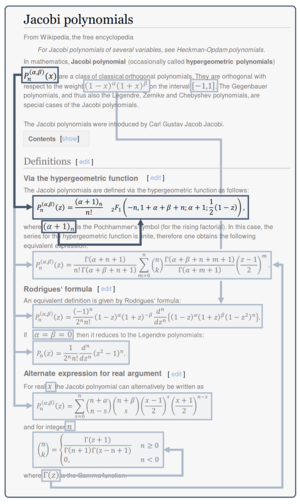

The Jacobi polynomials are defined via the hypergeometric function as follows:

where is Pochhammer's symbol (for the rising factorial).

The information and translations are generated based on the context of the formula, i.e., the article in which the formula appeared in. Consequently, clicking on the same formula in different articles may yield different results.

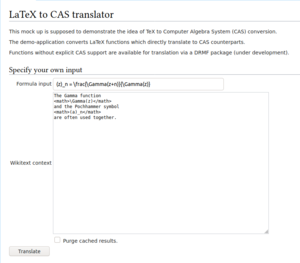

Setup Your Own Scenario

In addition, you can go to the special page directly and enter your own context and formula. Note that the given formula does not necessarily need to be in the provided context. Since the formula will be integrated into the dependency graph first, the necessary descriptive terms will be extracted from the ingoing dependencies.

Translation Pipeline

n(x) would be annotated with all descriptive terms (green) from the sentence in the introduction and the sentence in the definition of the Jacobi polynomial.

In the following, we briefly explain the translation process on an example. Because of page limitations, we did not put the example in our submission.

Example Translation

Consider the English Wikipedia article about Jacobi polynomials as our exemplary use case. The Figure on the right shows the dependency graph overlay for the first equation in that article. Consider further that we want to translate the equation

The dependency graph tells us that the equation contains two other MOI, namely from earlier in the article and right below the equation, while the definition itself is not part of any other MOI (no outgoing dependencies). Hence, the annotated descriptive terms for the equation are only the noun phrases extracted from the sentence the equation appears in. These noun phrases are Pochhammer's symbol (0.69), hypergeometric function (0.6), and Jacobi polynomial (0.6). Note that the term rising factorial at the end of the sentence is not included. Because of the aforementioned challenges in processing mathematical language (see Section 2.1 in our paper), CoreNLP tagged factorial as an adjective instead of a noun and, therefore, the phrase was not considered as a noun phrase.

Next we search for semantic macros with the descriptive terms annotated to the definition in the dependency graph and find \JacobipolyP for Jacobi polynomials, \Pochhammersym for the Pochhammer's symbol, and \genhyperF for hypergeometric function. Each retrieved macro contains a list of possible replacement patterns. Finally, we score each replacement pattern by the score that was generated from MLP for the descriptive term, the search score from ES for retrieving the macro, and the likelihood value of the semantic macro version in the DLMF. Finally, we iterate over every in-going dependency of the MOI and recursively apply the same process to each of the dependant MOIs. For the equation above, this recursive behavior would not be necessary since every component of the equation is already mentioned in the same sentence. However, consider as a counterexample the next equation in the same article

In the context of this equation, neither the Jacobi polynomial nor the Gamma function is mentioned in the sentence. However, iterating over the ingoing dependencies reveals from later in the article and from the introduction. Both MOI are annotated with the necessary descriptions to retrieve the replacement patterns for the gamma function and the Jacobi polynomial. Finally, we order the list of retrieved replacement patterns according to their scores. The score for a single replacement pattern is the average of the scores from each part of the retrieval pipeline. Those are (1) the noun phrase score for the given formula calculated from MLP, (2) the relative Elasticsearch score of the retrieved semantic macro replacement patterns, and the DLMF likelihood values for each generic LaTeX pattern.

Finally, we apply each replacement pattern that we extracted. The replacement patterns for \JacobipolyP, \Pochhammersym, and \genhyperF matched parts in the original LaTeX for the definition above. The original LaTeX looks like this:

P_n^{(\alpha,\beta)}(z)=\frac{(\alpha+1)_n}{n!}{}_2F_1\left(-n,1+\alpha+\beta+n;\alpha+1;\tfrac{1}{2}(1-z)\right)

The replacement patterns replaced each part with the semantic macros. Hence, the semantically enhanced (or simply semantic LaTeX) looks like this:

\JacobipolyP{\alpha}{\beta}{n}@{z} = \frac{\Pochhammersym{\alpha + 1}{n}}{n!}\genhyperF{2}{1}@{-n,1+\alpha+\beta+n}{\alpha+1}{\tfrac{1}{2}(1-z)}

Every semantic macro in this expression is supported by LCT. Hence, we can now use LCT to translate it to Maple

JacobiP(n, alpha, beta, z) = (pochhammer(alpha + 1, n))/(factorial(n))*hypergeom([- n , 1 + alpha + beta + n], [alpha + 1], (1)/(2)*(1 - z))

and Mathematica

JacobiP[n, \[Alpha], \[Beta], z] == Divide[Pochhammer[\[Alpha]+ 1, n],(n)!]*HypergeometricPFQ[{- n , 1 + \[Alpha]+ \[Beta]+ n}, {\[Alpha]+ 1}, Divide[1,2]*(1 - z)]

In addition, we automatically evaluate the equation numerically and symbolically as described in HIDDEN-REF. Both, Maple and Mathematica, symbolically and numerically verified that the equation was correct. The evaluation results for this particular example can be found on the demo page for the equation above: [2].

Evaluation Analysis

In this section, we refer to a benchmark entry with the associated ID (as in Table~\ref{tab:benchmark-examples}). The full benchmark dataset is accessible via our demo page\footnote{\url{https://sigir21.wmflabs.org} [accessed 01/02/2021]}.

Since the benchmark contains randomly picked formulae from the articles, it also contains entries that might not have been properly annotated with math-templates or math-tags in the Wikitext. Four entries in the benchmark (28, 43, 78, and 85) were wrongly detected by our engine and contained only parts of the actual formula. For example, entry 28 was extracted as _{1}(z) = from the Polylogarithm article because the formula was given in plain text <nowiki>Li<sub>1</sub>(''z'') = -ln(1-''z'')</nowiki>. While our approach correctly identified <nowiki><sub>1</sub>(''z'') =</nowiki> and (1-''z''), it did not identify the plain text words Li and ln as part of the formula. Some translations also failed due to inappropriate \LaTeX{} expressions. For example, the hypergeometric functions have an index prior to the identifier , such as in . Hence, in many cases, the subscript was attached to the previous token, such as equal signs (84) or asterisks for multiplications (75, 83), rather than using an empty expression {}_{1}. In one case (85), the editor split the equation into two parts via <nowiki></math><math></nowiki> to avoid the problem with the leading underscore.

Besides the wrong identification, we identified different failure reasons for a translation to semantic \LaTeX{} or CAS. In the following, we discuss the main reasons and possible solutions to avoid them, ordered by their impact on translation performance.

Definitions and Substitutions

Recognizing an equation as a definition would have a great impact on performance. As a test, we manually annotated every definition in the benchmark by replacing the equal sign $=$ with the unambiguous notation $:=$ and extended \LaCASt{} to recognize such combination as a definition of the left-hand side\footnote{The DLMF did not use this notation, hence \LaCASt{} was not capable of translating $:=$ in the first place.}. This resulted in 18 more correct translations (e.g., 66, 68, and 75) and increased the performance from $.28$ to $.47$.

Identifying definitions in a document would also solve the problem of substitutions. Consider, for example, the benchmark entry 3 containing the elliptic integral of the first kind , from the article about Elliptic integrals. Here, $x$ was defined as the Jacobian elliptic function $\operatorname{sn}(u,k)$ immediately above this equation. Hence, an appropriate translation of $F(x; k) = u$ to Mathematica would be

EllipticF[ArcSin[JacobiSN[u, (k)^2]], (k)^2] == u.

While \LaCASt{} is capable of performing such complex translation from semantic LaTeX, we were not able to detect the substitution of .

The dependency graph may provide beneficial information towards a definition recognition system for equations. However, rather than presuming that every equation symbol is indicating a definition~\cite{KristiantoTA17}, we propose a more selective approach. Considering one part of an equation (including multi-equations) as an extra MOI would establish additional dependencies in the dependency graph, such as a connection between $x = \operatorname{sn}(u,k)$ and $F(x; k) = u$. A combination with recent advances of definition recognition in NLP[1] may than allow us to detect $x$ as the defining element. The already established dependency between $x$ and $F(x; k) = u$ can finally be used to resolve the substitution. Hence, for future research, we will elaborate the possibility of integrating existing NLP techniques for definition recognition~\cite{AnkeS18,GinevM20} into our dependency graph concept.

Missing Information

Another problem that causes translations to fail is missing information. For example, the gamma function seems to be considered common knowledge in most articles on special functions because it is often not specifically declared by name in the context (e.g., 19, 31, and 53). To test the impact of considering the gamma function as common knowledge, we added the description `\textit{Euler gamma function}' to the list of noun phrases that fetch semantic macros from the database. Adding a low score ensures the pattern for the gamma function will be applied late in the list of replacement patterns. This indeed improved performance slightly, providing us a successful translation of three more benchmark entries (19, 56, and 93). Additionally, it improved the semantic \LaTeX{} in three other entries (22, 31, and 53) but these cases had other issues that prevented a successful translation. This naive approach, emphasizes the importance of knowing the domain knowledge for specific articles. Schubotz et al.~\cite{Schubotz16} utilized namespaces of mathematical expressions to improve definiens extractions for identifiers. These namespaces may allow us to activate the feature we used to test the impact of the gamma function more precisely in certain cases and deactivate it in others.

Non-Matching Replacement Patterns

An issue we would more regularly face in domains other than special functions and orthogonal polynomials are non-standard notations. For example, for six entries (18, 44, 59, 62, 77, and 87) we were not able to appropriately replace hypergeometric functions with semantic macros because they used the matrix and array environments in their arguments, while the DLMF (as shown in Table~\ref{tab:generic-semantic-mapping}) only uses \verb|\atop| for the same visualization. Consequently, none of our replacement patterns matched even though we correctly identified the expressions as hypergeometric functions. The entries 3 (from above) and 54, illustrate how minor errors already cause a failure in the semantic enhancement process. In the DLMF, the elliptic integral only uses a comma to separate the arguments. Hence, the appropriated semantic macro did not match $F(x; k)$. Similarly, the hypergeometric function in entry 54

inappropriately uses commas to split the arguments. Hence, again, none of the replacement patterns matched the expression. For an appropriate translation, one must derive the $1$ and $2$ from ${}_1F_2$ in order to extract the correct arguments.

One idea for solving these issues is to add more replacement rules to a macro. Greiner-Petter et al.~\cite{GreinerPetter2020} presented a search engine for MOI that allows searching for common notations for a given textual query. Searching for Jacobi polynomials in arXiv.org shows that different variants of $P_n^{(\alpha, \beta)}(x)$ are highly related or even equivalently used for Jacobi polynomials, such as $p$, $H$, or $R$ rather than $P$. However, widening the replacement patterns further may harm performance, as seen Table~\ref{tab:eval-semantic-trans} for higher numbers of retrieved noun phrases and macros.

Derivatives and Prime Notations

A related problem that we encountered with our implementation of replacement patterns are primes and derivative notations. Primes for differentiation are generally put in between the function identifier and its argument, e.g., $\operatorname{Ai}'(x)$. Unfortunately, this causes the replacement patterns to fail because \verb|Ai(var1)| did not allow tokens in between. We can avoid this by adding support for optional tokens to our pattern matching implementation. Hence, we would rather generate the pattern \verb|Ai opToken (var1)|.

The DLMF adds primes between the semantic macro and its arguments as well, e.g., \AiryAi'@{z}. Hence, we can also simply put this optional token into the semantic \LaTeX{} expression.

Synonyms

One minor issue we faced were synonyms. For example, entry 51 discusses `\textit{Kravchuk polynomials}' while the DLMF using the different transliteration `\textit{Krawtchouk polynomials}'. Hence, our pipeline was unable to find the correct semantic macro for the polynomials. Since we already use Elasticsearch (ES) to search for semantic macros, and ES is capable of handling synonyms, we only need to feed the database with common synonyms. In this case, the Wikipedia article itself mentions the alternative transliteration at the beginning of the article. We could extract these synonyms while analyzing the Wikitext and adding them to the semantic macro database.

- ↑ AnkeS18,GinevM20,VanetikLSR20,Kang2020